Gary Gorton Explains the Crisis

This financial crisis has had two legs: the initial boom and bust in housing prices, primarily in the USA, and the accelerator mechanism that then turned this crisis into a panic. The panic affected financial institutions almost indiscriminately, and countries furthest away from the USA (eg, Russia, Brazil) were actually among the worst performers. I think the boom and bust in the housing market was the product of a benign history combined with a variety of forces encouraging lower mortgage lending standards, under the assumptions that disparate origination statistics by race were the result of arbitrary underwriting standards, and that housing prices would not fall in aggregate (nominally, they had not really done so in the historical record). The government keeps meticulous data on loan originations by race to satisfy the Home Mortgage Disclosure Act, but does not keep track of default rates by these same categories (see here). After all, you don't want to give lenders the ability to defend their unconscionable redlining.

But until today, I was totally befuddled by the transmission mechanism, the amplifier. As I mentioned, last year at this time I was at an NBER conference, and Markus Brunnermeier pointed out that the decline in housing prices was insignificant relative to the change in the stock market, and this was in May 2008! Almost everyone in the audience of esteemed financial researchers agreed, and thought the market was in a curious overreaction. That is, we did not know why a loss of $X in housing valuation caused a $10X change in the stock market, and it was only going to get much worse. You see, greed, hubris, fat tails, copulas, and regulatory arbitrage do not really work as explanations. Merton's explanation, that as equity prices fall both leverage and volatility increase, presumes the very fall in equity values that needs explaining (after all, it is much greater than the fall in housing values).

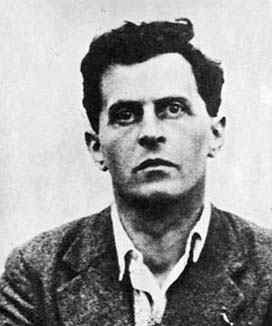

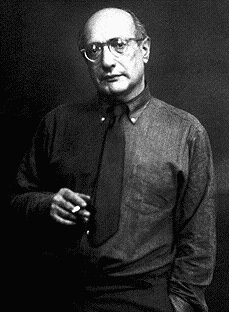

Gary Gorton offers the first really compelling explanation I have seen, and he's an economist. He draws on his experience as a consultant at AIG and on his work modeling bank runs. I think this highlights that those best able to fix things are unlikely to have totally clean hands. Banks are complex institutions with many very particular attributes to their balance sheets and cash flows, and while a physicist or non-economist would be untainted by the failure to foresee the crisis, such an outsider would also face a steep learning curve. It is easier to correct experts than to create them anew, and groups are rarely incorrigible when it comes to big mistakes that everyone sees as such (in contrast to, say, a post-modern literary theorist, whose failures are less concrete). Gorton did not anticipate the 2008 events, but his analysis is much richer than those blaming complexity or hubris (Merton did a nice job of noting that ABS are actually much less complex than your average equity security, since equity is a residual claim on a firm with lots of discretion).

The Gorton story is as follows. We have not had a true bank run in the US since the Great Depression, so we forgot what one looks like. The essence of a bank run is described in those books on Bear Stearns: sparked by plausible speculation and a first mover, if you think a bank is losing other depositors, it pays to take out your deposits. Because no bank is liquid enough to pay off all its depositors immediately, once this idea becomes popular the bank is doomed, as each depositor pursues its own self-interest in being first in line for its money.
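The first-in-line logic is easy to see in a toy model. Below is a minimal Python sketch of first-come, first-served withdrawals; the function name `payouts` and all the numbers (100 depositors, 10% of deposits held as liquid reserves) are hypothetical illustrations, not anything from Gorton.

```python
def payouts(num_depositors: int, deposit: float, liquid_assets: float,
            num_withdrawing: int) -> list[float]:
    """Return what each withdrawing depositor receives, in queue order.

    The bank pays each depositor in full until its liquid assets run out;
    depositors further back in line get whatever is left, then nothing.
    """
    if num_withdrawing > num_depositors:
        raise ValueError("cannot have more withdrawals than depositors")
    cash = liquid_assets
    paid = []
    for _ in range(num_withdrawing):
        amount = min(deposit, cash)  # pay in full if cash remains, else what is left
        paid.append(amount)
        cash -= amount
    return paid

# Hypothetical bank: 100 depositors with 1.0 each, 10.0 held as liquid reserves.
calm = payouts(100, 1.0, 10.0, num_withdrawing=5)   # an ordinary day
run = payouts(100, 1.0, 10.0, num_withdrawing=30)   # a run
print(sum(a == 1.0 for a in calm), sum(a == 1.0 for a in run))  # prints 5 10
```

On an ordinary day everyone who asks is paid; in the run only the first ten are made whole and the other twenty get nothing, which is exactly why it pays to be early once you suspect others are leaving.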

In the old system, retail customers would put their money in a bank. These depositors hold assets that can be used for transactional purposes: they can write a check, or have the money wired out in a matter of days. Yet the money can be lent out by the bank, and using the law of large numbers, the bank can estimate its depositors' daily cash needs, keep a sufficient amount of reserves, and everything is kosher. Since the Great Depression, retail runs have not been an issue. Yet now financial institutions are highly dependent on wholesale depositors. These are institutions like hedge funds, and instead of depositing money in a bank, they deposit Treasuries and, more frequently, AAA-rated securities (often derivatives). These depositors earn overnight repo returns, and their positions can be used for transactional purposes because they can be turned into cash on a day's notice. Just as in the retail story, where the bank lends out the money, the AAA bonds the wholesale depositors leave can be lent (rehypothecated) by the bank. Retail cash was replaced by AAA debt.
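To see why the law of large numbers lets a bank hold only a small reserve, here is a minimal sketch. It assumes, hypothetically, that each depositor independently withdraws everything on a given day with probability 2%, and that the bank holds the expected withdrawals plus three standard deviations (a normal approximation to the binomial). The function `reserve_needed` and its parameters are illustrative, not a standard banking formula.

```python
import math

def reserve_needed(num_depositors: int, deposit: float, p_withdraw: float,
                   z: float = 3.0) -> float:
    """Cash to hold so that daily withdrawals rarely exceed it.

    Assumes each depositor independently withdraws the full deposit with
    probability p_withdraw. Withdrawals are then binomial with mean n*p and
    standard deviation sqrt(n*p*(1-p)); we hold mean + z standard deviations.
    """
    n, p = num_depositors, p_withdraw
    mean = n * p
    sd = math.sqrt(n * p * (1 - p))
    return deposit * (mean + z * sd)

for n in (100, 10_000, 1_000_000):
    r = reserve_needed(n, 1.0, p_withdraw=0.02)
    print(n, round(r / n, 4))  # reserve as a fraction of total deposits
```

The required reserve fraction falls from about 6% to about 2% as the depositor base grows, because the standard deviation grows only with the square root of n. The whole calculation rests on independence, which is precisely the assumption a run destroys.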

Thus AAA-rated bonds became the cash used as deposits, and because AAA-rated asset-backed securities had higher yields but similar treatment to Treasuries, they grew more and more popular. A key to these AAA-rated bonds is that their default rates are, basically, zero: at Moody's we estimated them at 0.01% annually. One should expect to see only one or two of these securities default in one's working life. Thus they are informationally insensitive: they have so much safety in them that going over their risks is pointless and uninteresting. That is, since reams of data showed zero default rates, and the market thought they had an almost zero default rate, it was counterproductive to get worked up about their future default rates. You would have wasted a career crying wolf about AAA-rated securities from 1940 to 2005.
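The 0.01% figure is annual, so turning it into a career-length number is simple compounding. A minimal sketch, assuming a constant default probability that is independent from year to year; the 40-year career and the 500-bond book are hypothetical choices for illustration.

```python
def cumulative_default_prob(annual_rate: float, years: int) -> float:
    """Probability a single bond defaults at least once over `years`,
    assuming a constant, independent annual default probability."""
    return 1.0 - (1.0 - annual_rate) ** years

annual = 0.0001  # 0.01% per year, the AAA estimate quoted above
one_bond = cumulative_default_prob(annual, 40)  # hypothetical 40-year career
print(f"{one_bond:.4%}")  # prints 0.3992%
# Expected defaults across a hypothetical book of 500 AAA bonds over that career
print(round(500 * one_bond, 2))  # prints 2.0
```

Any single bond has well under a 1% chance of ever defaulting over a career, and even a sizable book would expect only a couple of defaults, which is why nobody bothered to dig into the details.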

A systemic shock to the financial system is an event that causes such debt to become informationally sensitive; in this case, the shock was to housing prices. When these assets become stressed, everyone is understandably perplexed. It's as if the electricity stopped working, an event no one really contemplated, and it is too late for everyone to become an electrician. Thus asset-backed securities and AAA-rated bonds of all kinds become suspect. What was previously information-insensitive becomes really complicated, as one works through the details of derivatives, or the balance sheets of banks, that were previously assumed to have a 0.01% annualized default rate. This is because Bayesian updating suggests that when you see several AAA-rated defaults, your model is probably incorrect, as opposed to your just having bad luck, and you have to first figure out what that model is and where it broke down (ie, is it just the housing price assumption?).
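The Bayesian point can be made concrete by comparing two hypotheses: the rating model is right (0.01% annual default rate) or it is broken (a higher rate). The sketch below uses binomial likelihoods; every input (a 1% prior that the model is broken, a 0.5% default rate if it is, 1,000 AAA bonds observed for one year) is a hypothetical assumption, not an estimate.

```python
from math import comb

def binom_pmf(k: int, n: int, p: float) -> float:
    """Probability of exactly k defaults among n bonds, each defaulting with prob p."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)

def prob_model_wrong(defaults: int, n_bonds: int, prior_wrong: float,
                     p_if_right: float, p_if_wrong: float) -> float:
    """Posterior probability that the rating model is wrong, via Bayes' rule."""
    like_right = binom_pmf(defaults, n_bonds, p_if_right)
    like_wrong = binom_pmf(defaults, n_bonds, p_if_wrong)
    numerator = prior_wrong * like_wrong
    return numerator / (numerator + (1 - prior_wrong) * like_right)

# Hypothetical inputs: 1% prior that the model is broken, 0.01% default rate
# if it is right, 0.5% if it is wrong, 1,000 AAA bonds watched for a year.
for k in (0, 1, 3):
    print(k, round(prob_model_wrong(k, 1000, 0.01, 0.0001, 0.005), 3))
```

Under these assumptions a single default barely moves the needle, but three defaults push the probability that the model is broken to roughly 0.9. At that point "bad luck" is no longer the sensible reading, and everyone has to start working out what the model actually assumed.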

This is consistent with US bank panics before 1933. Historically, the public became wary of all bank deposits, and banks' balance sheets went from informationally insensitive to sensitive, unmasking their complexity. Nonetheless, the actual number of bank failures in these crises was comparatively low (excluding the Great Depression).

Say the banking system has assets of $100 financed by equity capital of $10, long-term debt of $40, and short-term financing in the form of repo of $50. In the panic, repo haircuts rise to 20 percent as banks grow wary of formerly gold-standard AAA-rated securities, amounting to a withdrawal of $10 (20% of $50), so the system has to either shrink, borrow, or get an equity injection to make up for this. As we saw, after some early equity injections during the fall of 2007, this source dried up, as did the possibility of borrowing. That leaves asset sales, so the system as a whole needs to sell $10 of assets. Banks sell their tradeable securities as their liabilities shrink, and those securities are priced in a matrix alongside the AAA-rated collateral whose haircuts are rising, so fire-sale prices on one spill over into the marks on the other. Gorton estimates the banking system needed to replace about $2 trillion of financing when repo market haircuts rose. This is the amplifier.

The increase in haircuts effectively removed liabilities from the banking system, and prices fell on many comparable securities as banks shed assets. In the fire sale, the assets are now worth $80 against $80 of remaining liabilities ($40 of long-term debt and $40 of repo), so equity is wiped out. The system is insolvent at current market prices.
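The arithmetic of this stylized balance sheet can be checked in a few lines. A minimal sketch: the class `BankingSystem` and function `after_haircut` are hypothetical names, the haircut is assumed to rise from zero, and the $80 post-fire-sale value is taken from the example rather than derived from any price-impact model.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class BankingSystem:
    """Stylized aggregate balance sheet of the banking system (hypothetical)."""
    assets: float
    long_term_debt: float
    repo: float

    @property
    def equity(self) -> float:
        # Equity is whatever is left after all creditors are paid.
        return self.assets - self.long_term_debt - self.repo

def after_haircut(bank: BankingSystem, haircut: float,
                  marked_assets: Optional[float] = None) -> BankingSystem:
    """Repay the repo funding lost when haircuts rise from zero to `haircut`.

    Repo lenders now advance only (1 - haircut) per dollar of collateral, so
    haircut * repo must be repaid by selling assets. If `marked_assets` is
    given, the remaining assets are marked at that fire-sale value instead
    of at book value.
    """
    withdrawal = haircut * bank.repo
    assets = bank.assets - withdrawal if marked_assets is None else marked_assets
    return BankingSystem(assets, bank.long_term_debt, bank.repo - withdrawal)

before = BankingSystem(assets=100.0, long_term_debt=40.0, repo=50.0)
at_book = after_haircut(before, 0.20)                        # sell $10 at book value
fire_sale = after_haircut(before, 0.20, marked_assets=80.0)  # $80, as in the example

print(before.equity, at_book.equity, fire_sale.equity)  # prints 10.0 10.0 0.0
```

The comparison isolates the mechanism: shrinking the balance sheet by $10 at book value leaves equity untouched, and it is the fire-sale markdown on everything that remains that wipes equity out.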

How do we know depositors were confused about which banks were at risk? The evidence is that asset classes unrelated to subprime, like auto loans, credit cards, and emerging market debt, saw their spreads rise significantly only when the interbank market started to break down.

A banking panic is a systemic event because the banking system as a whole is always insolvent in such a scenario.

Thus the problem is that repo replaced retail deposits as the center of the bank run: AAA-rated securities of all types used in these transactions were implicitly downgraded to non-prime (below A2/P2), many of them based on mortgages, which historically were the safest asset class in the US. There was a bank run, but it was in the 'shadow banking sector', so all we could see were the effects, not the cause.

His suggestion is that we set up groups to oversee the AAA securities used in repo transactions, and perhaps put a government guarantee on them. Clearly, this would have addressed the 2008 crisis, but as always one needs to look forward. Bank crises were much less frequent in the 20th century than in the 19th, but we need to be mindful of fixing the cause and not the symptom. Looking at it through this narrative, I marvel at how the future can be so like the past, yet different enough to escape notice in real time. I suppose every war or financial crisis is like that.