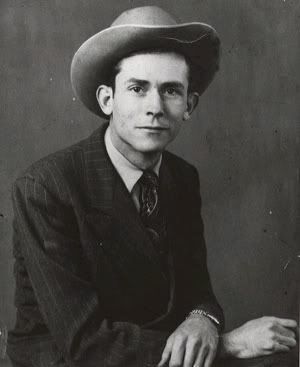

I doubt a week has gone by since last summer during which I haven't seen some pundit or other trot out Walter Bagehot's dictum that in the event of a credit crunch, the central bank should lend freely at a penalty rate. More often than not, this is contrasted with the actions of the Federal Reserve, which seems to be lending freely at very low interest rates.

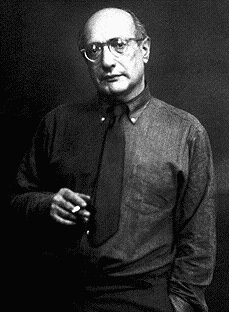

Ben Bernanke, in a speech today, addressed this criticism directly:

> What are the terms at which the central bank should lend freely? Bagehot argues that "these loans should only be made at a very high rate of interest". Some modern commentators have rationalized Bagehot's dictum to lend at a high or "penalty" rate as a way to mitigate moral hazard--that is, to help maintain incentives for private-sector banks to provide for adequate liquidity in advance of any crisis. I will return to the issue of moral hazard later. But it is worth pointing out briefly that, in fact, the risk of moral hazard did not appear to be Bagehot's principal motivation for recommending a high rate; rather, he saw it as a tool to dissuade unnecessary borrowing and thus to help protect the Bank of England's own finite store of liquid assets. Today, potential limitations on the central bank's lending capacity are not nearly so pressing an issue as in Bagehot's time, when the central bank's ability to provide liquidity was far more tenuous.

I'm no expert on Walter Bagehot, and in fact I admit I've never read Lombard Street. But I'll trust in Bernanke as an economic historian on this one, unless and until someone else makes a persuasive case that Bagehot's penalty rate really was designed to punish the feckless rather than just to preserve the Bank of England's limited liquidity.
