Letter from the chair

Mon December 15, 2008

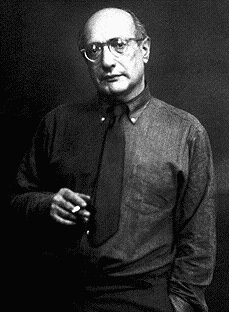

Andrew Barto, Professor and Chair

Letters from the chair are not usually about technical subjects, but as I write this I am having trouble ignoring the uncertainty we are facing both locally and around the globe. With so many researchers in Computer Science and other disciplines studying decision making under uncertainty, one might think that we could turn the crank on any number of sophisticated algorithms to figure out the best course of action for maximizing our ‘expected utility’, even while lacking complete information about the state of the world, about how our decisions might change things, and about what the unfolding future will mean for our measures of utility.

Why aren't decision theorists saving the day? Indeed, why has some of the blame for the current financial crisis been attributed to new financial instruments that owe their existence to complex theories and algorithms? There are many answers to these questions, but putting aside the big issue of how we assign utility to possible futures, the main answer is that these methods need either accurate probabilistic models or immense amounts of data, not to mention a lot of regularity in what is happening in the world. Lacking these, we can argue about whose probability estimates are the best, with our inference algorithms waiting in the wings to grind out recommendations, all the while knowing that there is no infallible way to predict the future.

But evolution has given us skills for dealing with uncertainty: not skills that necessarily help us manage our finances, but skills that have kept our ancestors alive through the millennia. It is not known exactly how we are wired to act in the face of uncertainty, but a few theories, even if false, are thought-provoking. One is that the brain has different systems, mediated by different neurotransmitters, for dealing with expected uncertainty and unexpected uncertainty (A. J. Yu and P. Dayan, "Uncertainty, Neuromodulation, and Attention," Neuron, 46, 681–692, 2005). Expected uncertainty occurs when we are in a familiar environment and have had a lot of experience with its unreliable predictive relationships. We have fairly good probability estimates and strong top-down expectations. Unexpected uncertainty, on the other hand, occurs when a major shift in context causes our top-down expectations to be grossly wrong. According to this theory, the degree of expected uncertainty is signaled by the level of one neurotransmitter (acetylcholine), which causes us to pay less attention to our models (our preconceptions) and more to what we are actually experiencing. The neurotransmitter that signals unexpected uncertainty (norepinephrine), on the other hand, has the additional effect of causing us to shift attention in order to search for new cues that might be predictive in the new context, thus helping us to form new models rather than just tune the old ones. Increased levels of norepinephrine are also associated with increased anxiety.

If something like this theory is correct, then what is responsible for the anxiety we are feeling is not just uncertainty; it is unexpected uncertainty. Perhaps we can take a measure of comfort from the possibility that the brain machinery causing our anxiety is also encouraging us to look beyond the information streams we have relied on up to now, as we try to form new models that can guide us going forward. I would put more trust in computational decision aids if they included this same kind of machinery.
