Phony Experts

Posted on: March 26, 2009 4:08 PM, by Jonah Lehrer

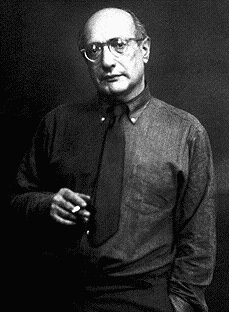

Nicholas Kristof has a great column today on Philip Tetlock and political experts, who turn out to be astonishingly bad at making accurate predictions:

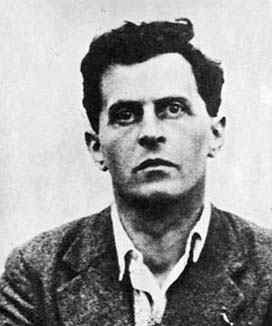

The expert on experts is Philip Tetlock, a professor at the University of California, Berkeley. His 2005 book, "Expert Political Judgment," is based on two decades of tracking some 82,000 predictions by 284 experts. The experts' forecasts were tracked both on the subjects of their specialties and on subjects that they knew little about. The result? The predictions of experts were, on average, only a tiny bit better than random guesses -- the equivalent of a chimpanzee throwing darts at a board.

"It made virtually no difference whether participants had doctorates, whether they were economists, political scientists, journalists or historians, whether they had policy experience or access to classified information, or whether they had logged many or few years of experience," Mr. Tetlock wrote.

Why are political pundits so often wrong? In my book, I devote a fair amount of ink to Tetlock's epic study. The central error diagnosed by Tetlock was the sin of certainty: pundits were so convinced they were right that they ended up neglecting all those facts suggesting they were wrong. "The dominant danger [for pundits] remains hubris, the vice of closed-mindedness, of dismissing dissonant possibilities too quickly," Tetlock writes. (This is also why the most eminent pundits were the most unreliable. Being famous led to a false sense of confidence.)

Is there a way to fix this mess? Or is cable news bound to be populated by talking heads who perform worse than random chance? As Kristof notes, Tetlock advocates the creation of a "trans-ideological Consumer Reports for punditry," which doesn't strike me as particularly feasible. Instead, I'd argue that the easiest fix is for anchors on news shows to simply spend more time asking pundits about all those predictions that turned out to be wrong. The point isn't to generate a public shaming - although that would certainly be more entertaining than most cable news shows - but to force pundits to genuflect and introspect on why they made a mistake in the first place. Were they too ideological? Were they thinking like a hedgehog? What relevant facts did they ignore? And why did they ignore them?

By asking the right questions, we can make our pundits better. They'll never be perfect, or even worth their inflated paychecks, but perhaps we can help them perform better than chimpanzees throwing darts. Tetlock ends his book with some take-home advice on what we can all learn from the failures of political experts: "We need to cultivate the art of self-overhearing," he says, "to learn how to eavesdrop on the mental conversations we have with ourselves." A big part of that mental conversation is studying the biases and habits that led us to err.

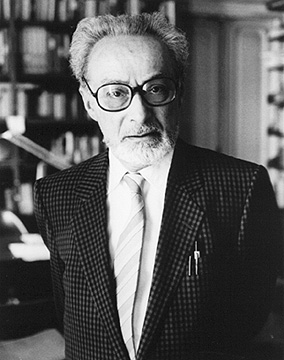

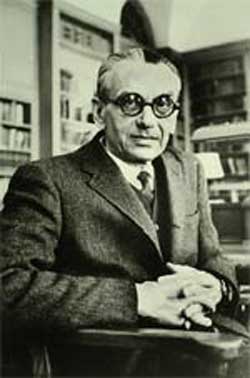

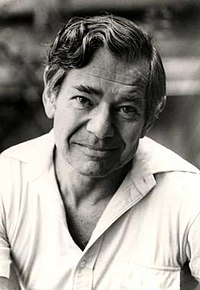

And here, via Steve, is a new study by Gregory Berns of Emory on how even bad "expert" advice can influence decision-making in the brain. Here's the Wired summary:

In the study, Berns' team hooked 24 college students to brain scanners as they contemplated swapping a guaranteed payment for a chance at a higher lottery payout. Sometimes the students made the decision on their own. At other times they received written advice from Charles Noussair, an Emory University economist who advises the U.S. Federal Reserve.

Though the recommendations were delivered under his imprimatur, Noussair himself wouldn't necessarily follow it. The advice was extremely conservative, often urging students to accept tiny guaranteed payouts rather than playing a lottery with great odds and a high payout. But students tended to follow his advice regardless of the situation, especially when it was bad.

When thinking for themselves, students showed activity in their anterior cingulate cortex and dorsolateral prefrontal cortex -- brain regions associated with making decisions and calculating probabilities. When given advice from Noussair, activity in those regions flat lined.

I'm most interested in the reduced activity seen in the ACC, since that brain area is often associated with cognitive conflict. (It's activated, for instance, when people are presented with contradictory facts or dissonant information.) I think one way to understand the influence of experts is that, because they're "experts," we aren't as motivated to think of all the reasons they might be spouting nonsense. If so, that would be an unfortunate neural response, since all the evidence suggests they really are spouting nonsense.
