‘The story that I have to tell is marked all the way through by a persistent tension between those who assert that the best decisions are based on( 1 ) quantification and numbers, determined by the patterns of the past, and those who base their decisions on( 2 ) more subjective degrees of belief about the uncertain future. This is a controversy that has never been resolved( TRUE ).’

— FROM THE INTRODUCTION TO “AGAINST THE GODS: THE REMARKABLE STORY OF RISK,” BY PETER L. BERNSTEIN

THERE AREN’T MANY widely told anecdotes about the current financial crisis, at least not yet, but there’s one that made the rounds in 2007, back when the big investment banks were first starting to write down billions of dollars in mortgage-backed derivatives and other so-called toxic securities. This was well before Bear Stearns collapsed, before Fannie Mae and Freddie Mac were taken over by the federal government, before Lehman fell and Merrill Lynch was sold and A.I.G. saved, before the $700 billion bailout bill was rushed into law. Before, that is, it became obvious that the risks taken by the largest banks and investment firms in the United States — and, indeed, in much of the Western world — were so excessive and foolhardy that they threatened to bring down the financial system itself. On the contrary: this was back when the major investment firms were still assuring investors that all was well, these little speed bumps notwithstanding — assurances based, in part, on their fantastically complex mathematical models( THIS IS WHERE THE REAL COMPLEXITY IS ) for measuring the risk in their various portfolios.

There are many such models, but by far the most widely used is called VaR — Value at Risk. Built around statistical ideas and probability theories that have been around for centuries, VaR was developed and popularized in the early 1990s by a handful of scientists and mathematicians — “quants,” they’re called in the business — who went to work for JPMorgan. VaR’s great appeal, and its great selling point to people who do not happen to be quants, is that it expresses risk as a single number, a dollar figure, no less.

VaR isn’t one model but rather a group of related models that share a mathematical framework. In its most common form, it measures the boundaries of risk in a portfolio over short durations, assuming a “normal” market. For instance, if you have $50 million of weekly VaR, that means that over the course of the next week, there is a 99 percent chance that your portfolio won’t lose more than $50 million. That portfolio could consist of equities, bonds, derivatives or all of the above; one reason VaR became so popular is that it is the only commonly used risk measure that can be applied to just about any asset class. And it takes into account a head-spinning variety of variables, including diversification, leverage and volatility, that make up the kind of market risk that traders and firms face every day.
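The article gives no formula, but the simplest member of the VaR family, usually called parametric or variance-covariance VaR, makes the $50 million example concrete. The sketch below assumes that weekly returns are normally distributed with zero mean; the $1 billion portfolio and its 2.15 percent weekly volatility are invented so that the answer lands near $50 million.

```python
from statistics import NormalDist

def parametric_var(portfolio_value: float, sigma: float, confidence: float = 0.99) -> float:
    """Loss that should be exceeded with probability (1 - confidence) over the horizon.

    Assumes returns over the horizon are normally distributed with zero mean
    and standard deviation `sigma` (a fraction, e.g. 0.02 means 2 percent).
    """
    z = NormalDist().inv_cdf(confidence)  # about 2.33 standard deviations at 99 percent
    return z * sigma * portfolio_value

# Hypothetical portfolio: $1 billion with a 2.15 percent weekly standard deviation.
var_99 = parametric_var(1_000_000_000, 0.0215)
print(f"99% weekly VaR: ${var_99 / 1e6:.1f} million")
```

The single number hides every assumption that produced it: change the volatility estimate or the confidence level and the dollar figure moves with it.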

Another reason VaR is so appealing is that it can measure both individual risks — the amount of risk contained in a single trader’s portfolio, for instance — and firmwide risk, which it does by combining the VaRs of a given firm’s trading desks and coming up with a net number. Top executives usually know their firm’s daily VaR within minutes of the market’s close.
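How desk-level VaRs combine into a firmwide figure depends on how the desks' results move together. A minimal sketch, assuming every desk's profit and loss is normally distributed and the desks are related only through a correlation matrix (the desks and all numbers are hypothetical): under those assumptions the firm's VaR is the square root of v'Rv, where v holds the desk VaRs and R the correlations, and it is never more than the simple sum of the desk VaRs.

```python
import math

def firmwide_var(desk_vars: list[float], corr: list[list[float]]) -> float:
    """Combine desk VaRs into one firmwide VaR, assuming normally distributed
    desk P&L linked only through the correlation matrix `corr`."""
    n = len(desk_vars)
    total = 0.0
    for i in range(n):
        for j in range(n):
            total += desk_vars[i] * corr[i][j] * desk_vars[j]
    return math.sqrt(total)

# Hypothetical desks: equities, bonds, currencies (VaR in $ millions).
desks = [30.0, 40.0, 20.0]
corr = [
    [1.0, 0.2, 0.1],
    [0.2, 1.0, -0.3],  # bonds and currencies assumed to move partly against each other
    [0.1, -0.3, 1.0],
]
print(f"naive sum: {sum(desks):.1f}, firmwide: {firmwide_var(desks, corr):.1f}")
```

The firmwide number comes out well below the naive sum because the assumed correlations allow some risks to offset; with every correlation set to 1 it would equal the sum. The result is only as good as the correlation estimates, which tend to rise toward 1 in a crisis.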

Risk managers use VaR to quantify their firm’s risk positions to their board. In the late 1990s, as the use of derivatives was exploding, the Securities and Exchange Commission ruled that firms had to include a quantitative disclosure of market risks in their financial statements for the convenience of investors, and VaR became the main tool for doing so. Around the same time, an important international rule-making body, the Basel Committee on Banking Supervision, went even further to validate VaR by saying that firms and banks could rely on their own internal VaR calculations to set their capital requirements. So long as their VaR was reasonably low, the amount of money they had to set aside to cover risks that might go bad could also be low.
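The link between internal VaR and required capital can be sketched with a simplified version of the market-risk rule the Basel Committee adopted in 1996: capital is the larger of the previous day's 10-day, 99 percent VaR and a multiplier (at least 3) times the average VaR over the last 60 business days. This sketch ignores the specific-risk charge, the backtesting add-on to the multiplier and all later revisions, and the VaR histories are hypothetical.

```python
import math

def market_risk_capital(daily_var_history: list[float], multiplier: float = 3.0) -> float:
    """Simplified internal-models capital charge for market risk.

    daily_var_history: one-day 99% VaR figures, oldest first (hypothetical data).
    Each figure is scaled to a 10-day horizon with the square-root-of-time rule,
    which itself assumes independent, identically distributed daily returns.
    """
    window = daily_var_history[-60:]              # last 60 business days
    ten_day = [v * math.sqrt(10) for v in window]
    average = sum(ten_day) / len(ten_day)
    return max(ten_day[-1], multiplier * average)

low = market_risk_capital([20.0] * 60)   # steady $20 million daily VaR
high = market_risk_capital([40.0] * 60)  # steady $40 million daily VaR
print(f"capital: ${low:.0f}m vs ${high:.0f}m")
```

Because the charge scales with the firm's own VaR, halving reported VaR halves the required capital, which is the dynamic the paragraph describes.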

Given the calamity that has since occurred, there has been a great deal of talk, even in quant circles, that this widespread institutional reliance on VaR was a terrible mistake. At the very least, the risks that VaR measured did not include the biggest risk of all: the possibility of a financial meltdown. “Risk modeling didn’t help as much as it should have,” says Aaron Brown, a former risk manager at Morgan Stanley who now works at AQR, a big quant-oriented hedge fund. A risk consultant named Marc Groz says, “VaR is a very limited tool.” David Einhorn, who founded Greenlight Capital, a prominent hedge fund, wrote not long ago that VaR was “relatively useless as a risk-management tool and potentially catastrophic when its use creates a false sense of security among senior managers and watchdogs. This is like an air bag that works all the time, except when you have a car accident.” Nassim Nicholas Taleb, the best-selling author of “The Black Swan,” has crusaded against VaR for more than a decade. He calls it, flatly, “a fraud.”

How then do we account for that story that made the rounds in the summer of 2007? It concerns Goldman Sachs, the one Wall Street firm that was not, at that time, taking a hit for billions of dollars of suddenly devalued mortgage-backed securities. Reporters wanted to understand how Goldman had somehow sidestepped the disaster that had befallen everyone else. What they discovered was that in December 2006, Goldman’s various indicators, including VaR and other risk models, began suggesting that something was wrong. Not hugely wrong, mind you, but wrong enough to warrant a closer look.

“We look at the P.& L. of our businesses every day,” said Goldman Sachs’ chief financial officer, David Viniar, when I went to see him recently to hear the story for myself. (P.& L. stands for profit and loss.) “We have lots of models here that are important, but none are more important than the P.& L., and we check every day to make sure our P.& L. is consistent with where our risk models say it should be. In December our mortgage business lost money for 10 days in a row. It wasn’t a lot of money, but by the 10th day we thought that we should sit down and talk about it.”
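Viniar describes two different signals: whether daily losses stayed within what the risk models predicted, and a pattern (ten losing days in a row) that no single day's VaR would flag. A minimal sketch of both checks follows; the function names and the P&L data are hypothetical, and nothing here describes Goldman's actual systems.

```python
def longest_losing_streak(daily_pnl: list[float]) -> int:
    """Longest run of consecutive days with a loss."""
    longest = current = 0
    for pnl in daily_pnl:
        current = current + 1 if pnl < 0 else 0
        longest = max(longest, current)
    return longest

def var_breaches(daily_pnl: list[float], daily_var: list[float]) -> int:
    """Days on which the loss exceeded that day's VaR (VaR given as a positive number)."""
    return sum(1 for pnl, var in zip(daily_pnl, daily_var) if -pnl > var)

# If up and down days were independent coin flips (a strong assumption),
# ten losing days in a row would have probability 0.5 ** 10, about 1 in 1,000.
p_ten_losses = 0.5 ** 10

# Hypothetical desk, $ millions: small losses, none near a $10 million VaR.
pnl = [1.2, -0.3, -0.4, -0.2, -0.5, -0.1, -0.3, -0.6, -0.2, -0.4, -0.3, 0.8]
print(longest_losing_streak(pnl), var_breaches(pnl, [10.0] * len(pnl)), p_ten_losses)
```

In the hypothetical data the streak, not the size of any one loss, is what stands out: no day breaches VaR, yet ten consecutive losses are improbable enough to justify a meeting.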

So Goldman called a meeting of about 15 people, including several risk managers and the senior people on the various trading desks. They examined a thick report that included every trading position the firm held. For the next three hours, they pored over everything. They examined their VaR numbers and their other risk models. They talked about how the mortgage-backed securities market “felt.” “Our guys said that it felt like it was going to get worse before it got better,” Viniar recalled. “So we made a decision: let’s get closer to home.”

In trading parlance, “getting closer to home” means reining in the risk, which in this case meant either getting rid of the mortgage-backed securities or hedging the positions so that if they declined in value, the hedges would counteract the loss with an equivalent gain. Goldman did both. And that’s why, back in the summer of 2007, Goldman Sachs avoided the pain that was being suffered by Bear Stearns, Merrill Lynch, Lehman Brothers and the rest of Wall Street.

The story was told and retold in the business pages. But what did it mean, exactly? The question was always left hanging. Was it an example of the futility of risk modeling or its utility? Did it show that risk models, properly understood, were not a fraud after all but a potentially important signal that trouble was brewing? Or did it suggest instead that a handful of human beings at Goldman Sachs acted wisely by putting their models aside and making “decisions on more subjective degrees of belief about the uncertain future,” as Peter L. Bernstein put it in “Against the Gods”?

To put it in blunter terms, could VaR and the other risk models Wall Street relies on have helped prevent the financial crisis if only Wall Street paid better attention to them? Or did Wall Street’s reliance on them help lead us into the abyss?

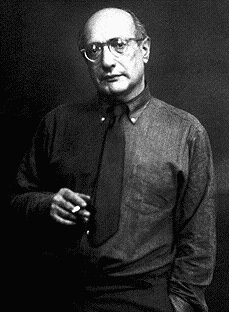

One Saturday a few months ago, Taleb, a trim, impeccably dressed, middle-aged man — inexplicably, he won’t give his age — walked into a lobby in the Columbia Business School and headed for a classroom to give a guest lecture. Until that moment, the lobby was filled with students chatting and eating a quick lunch before the afternoon session began, but as soon as they saw Taleb, they streamed toward him, surrounding him and moving with him as he slowly inched his way up the stairs toward an already-crowded classroom. Those who couldn’t get in had to make do with the next classroom over, which had been set up as an overflow room. It was jammed, too.

It’s not every day that an options trader becomes famous by writing a book, but that’s what Taleb did, first with “Fooled by Randomness,” which was published in 2001 and became an immediate cult classic on Wall Street, and more recently with “The Black Swan: The Impact of the Highly Improbable,” which came out in 2007 and landed on a number of best-seller lists. He also went from being primarily an options trader to what he always really wanted to be: a public intellectual. When I made the mistake of asking him one day whether he was an adjunct professor, he quickly corrected me. “I’m the Distinguished Professor of Risk Engineering at N.Y.U.,” he responded. “It’s the highest title they give in that department.” Humility is not among his virtues. On his Web site he has a link that reads, “Quotes from ‘The Black Swan’ that the imbeciles did not want to hear.”

“How many of you took statistics at Columbia?” he asked as he began his lecture. Most of the hands in the room shot up. “You wasted your money,” he sniffed. Behind him was a slide of Mickey Mouse that he had put up on the screen, he said, because it represented “Mickey Mouse probabilities.” That pretty much sums up his view of business-school statistics and probability courses.

Taleb’s ideas can be difficult to follow, in part because he uses the language of academic statisticians; words like “Gaussian,” “kurtosis” and “variance” roll off his tongue. But it’s also because he speaks in a kind of brusque shorthand, acting as if any fool should be able to follow his train of thought, which he can’t be bothered to fully explain.

“This is a Stan O’Neal trade,” he said, referring to the former chief executive of Merrill Lynch. He clicked to a slide that showed a trade that made slow, steady profits — and then quickly spiraled downward for a giant, brutal loss.

“Why do people measure risks against events that took place in 1987?” he asked, referring to Black Monday, the October day when the U.S. stock market lost more than 20 percent of its value, a day that has been used ever since as the worst-case scenario in many risk models. “Why is that a benchmark? I call it future-blindness.

“If you have a pilot flying a plane who doesn’t understand there can be storms, what is going to happen?” he asked. “He is not going to have a magnificent flight. Any small error is going to crash a plane. This is why the crisis that happened was predictable.”

Eventually, though, you do start to get the point. Taleb says that Wall Street risk models, no matter how mathematically sophisticated, are bogus; indeed, he is the leader of the camp that believes that risk models have done far more harm than good. And the essential reason for this is that the greatest risks are never the ones you can see and measure, but the ones you can’t see and therefore can never measure. The ones that seem so far outside the boundary of normal probability that you can’t imagine they could happen in your lifetime — even though, of course, they do happen, more often than you care to realize. Devastating hurricanes happen. Earthquakes happen. And once in a great while, huge financial catastrophes happen. Catastrophes that risk models somehow always manage to miss.

VaR is Taleb’s favorite case in point. The original VaR measured portfolio risk along what is called a “normal distribution curve,” a probability distribution most closely associated with Carl Friedrich Gauss, who worked with it in the early 1800s (hence the term “Gaussian”). It is a simple bell curve of the sort we are all familiar with.

The reason the normal curve looks the way it does — why it rises as it gets closer to the middle — is that small changes in the thing you’re measuring are far more common than large ones. A typical stock or portfolio of stocks, for example, is far likelier to gain or lose one point in a day (or a week) than it is to gain or lose 20 points. So the observations cluster around those small changes in the middle of the curve, while the rarer, larger changes fall out along the ends of the curve, known as the tails.
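Under a normal curve, the chance of a move shrinks very quickly as the move grows, measured in standard deviations (sigma). The short sketch below computes the two-sided probability of a move beyond k sigma using only the Python standard library; it illustrates the bell curve itself, not any particular firm's model.

```python
import math

def prob_move_beyond(k_sigma: float) -> float:
    """Probability that a normally distributed change lands more than
    k_sigma standard deviations from the mean, in either direction."""
    return math.erfc(k_sigma / math.sqrt(2))

for k in (1, 2, 3, 4):
    print(f"move beyond {k} sigma: {prob_move_beyond(k):.4%}")
```

Roughly a third of observations fall more than 1 sigma from the mean, under 5 percent more than 2 sigma and about 0.3 percent more than 3 sigma; that rapid thinning is what a fat-tailed world violates.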

VaR uses this normal distribution curve to plot the riskiness of a portfolio. But it makes certain assumptions. VaR is often measured daily and rarely extends beyond a few weeks, and because it is a very short-term measure, it assumes that tomorrow will be more or less like today. Even what’s called “historical VaR,” a variation that estimates risk by replaying the market moves actually observed in the past rather than assuming a bell curve, typically uses only the previous few years as its benchmark. As the risk consultant Marc Groz puts it, “The years 2005-2006,” which were the culmination of the housing bubble, “aren’t a very good universe for predicting what happened in 2007-2008.”
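Historical VaR, as commonly implemented, replays the daily returns from a look-back window on today's portfolio and reads the VaR off the resulting losses. A minimal sketch, with invented return series, shows why the choice of window matters in the way Groz describes: a window with no crisis in it cannot report crisis-sized losses. The quantile convention used here is one simple choice among several.

```python
import math

def historical_var(returns: list[float], portfolio_value: float, confidence: float = 0.99) -> float:
    """Historical-simulation VaR: replay past returns on today's portfolio.

    Returns the loss that only a (1 - confidence) share of the historical
    days exceeded.
    """
    losses = sorted((-r * portfolio_value for r in returns), reverse=True)  # worst first
    n_worse = round(len(losses) * (1 - confidence))  # days allowed to be worse than VaR
    return losses[min(n_worse, len(losses) - 1)]

value = 100e6  # hypothetical $100 million portfolio
calm = [0.002 * math.sin(i) for i in range(500)]  # hypothetical quiet two-year window
stressed = calm[:490] + [-0.08, -0.05, -0.07, -0.04, -0.06, -0.03, -0.09, -0.05, -0.04, -0.06]
print(f"calm window: ${historical_var(calm, value) / 1e6:.2f}m")
print(f"window with a crisis: ${historical_var(stressed, value) / 1e6:.2f}m")
```

Same portfolio, same method: the only difference is whether the history being replayed contains bad days.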

This was one of Alan Greenspan’s primary excuses when he made his mea culpa for the financial crisis before Congress a few months ago. After pointing out that a Nobel Prize had been awarded for work that led to some of the theories behind derivative pricing and risk management, he said: “The whole intellectual edifice, however, collapsed in the summer of last year because the data input into the risk-management models generally covered only the past two decades, a period of euphoria. Had instead the models been fitted more appropriately to historic periods of stress, capital requirements would have been much higher and the financial world would be in far better shape today, in my judgment.” Well, yes. That was also the point Taleb was making in his lecture when he referred to what he called future-blindness. People tend not to be able to anticipate a future they have never personally experienced.

Yet even faulty historical data isn’t Taleb’s primary concern. What he cares about, with standard VaR, is not the number that falls within the 99 percent probability. He cares about what happens in the other 1 percent, at the extreme edge of the curve. The fact that you are not likely to lose more than a certain amount 99 percent of the time tells you absolutely nothing about what could happen the other 1 percent of the time. You could lose $51 million instead of $50 million — no big deal. Losses a little beyond the VaR figure are expected now and then (with a daily 99 percent VaR, on roughly two or three of the 250 or so trading days in a year), and no one blinks an eye. You could also lose billions and go out of business. VaR has no way of measuring which it will be.
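Two hypothetical portfolios make the point concrete. Each has 100 equally likely weekly outcomes that are identical except for the single worst one: a $51 million loss for one, a $5 billion loss for the other. Their 99 percent VaR is the same; only a tail measure, such as the average loss in the worst 1 percent of outcomes (what risk managers call expected shortfall), tells them apart. The numbers are invented for illustration.

```python
def var_and_tail_average(losses: list[float], confidence: float = 0.99) -> tuple[float, float]:
    """For equally likely scenario losses, return (VaR, average loss beyond VaR).

    VaR is the loss exceeded in only a (1 - confidence) share of scenarios;
    the second value averages the losses in that worst share.
    """
    worst_first = sorted(losses, reverse=True)
    n_tail = max(1, round(len(worst_first) * (1 - confidence)))
    var = worst_first[min(n_tail, len(worst_first) - 1)]
    tail_average = sum(worst_first[:n_tail]) / n_tail
    return var, tail_average

body = [float(i) for i in range(51)] + [0.0] * 48  # 99 ordinary outcomes, $ millions
mild = body + [51.0]      # worst case: lose $51 million
severe = body + [5000.0]  # worst case: lose $5 billion
print(var_and_tail_average(mild))
print(var_and_tail_average(severe))
```

Both calls report a VaR of $50 million; the tail averages are $51 million and $5 billion. The VaR number alone cannot distinguish the portfolio that survives from the one that does not.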

What will cause you to lose billions instead of millions? Something rare, something you’ve never considered a possibility. Taleb calls these events “fat tails” or “black swans,” and he is convinced that they take place far more frequently than most human beings are willing to contemplate. Groz has his own way of illustrating the problem: he showed me a slide he made of a curve with the letters “T.B.D.” at the extreme ends of the curve. I thought the letters stood for “To Be Determined,” but that wasn’t what Groz meant. “T.B.D. stands for ‘There Be Dragons,’ ” he told me.
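One way to see what is at stake is to ask how often a Black Monday-sized move should happen. The sketch below assumes, purely for illustration, a daily market standard deviation of about 1 percent, so a 20 percent one-day drop is a 20-sigma event. It compares the normal curve with a Student's t distribution with 3 degrees of freedom rescaled to the same standard deviation, a common textbook stand-in for fat tails and not a model anyone in the article is said to use.

```python
import math

TRADING_DAYS = 252  # approximate trading days per year

def normal_left_tail(k: float) -> float:
    """P(X < -k) for a standard normal X."""
    return 0.5 * math.erfc(k / math.sqrt(2))

def t3_left_tail(k: float) -> float:
    """P(X < -k) for Student's t with 3 degrees of freedom, rescaled to unit
    variance. Closed form, valid for k > 0."""
    return (math.atan(1 / k) - k / (1 + k * k)) / math.pi

k = 20.0  # assumed: a 20 percent one-day drop with about 1 percent daily volatility
for name, p in [("normal", normal_left_tail(k)), ("fat-tailed t(3)", t3_left_tail(k))]:
    years = 1 / (p * TRADING_DAYS)
    print(f"{name}: probability {p:.3g} per day, about once every {years:.3g} years")
```

Under the normal curve the answer is effectively never, a waiting time vastly longer than the age of the universe; under the fat-tailed curve it is roughly once every 150 years. Neither figure is a forecast; the gap between them is the point.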

And that’s the point. Because we don’t know what a black swan might look like or when it might appear, and therefore don’t plan for it, it will always get us in the end. “Any system susceptible to a black swan will eventually blow up,” Taleb says. The modern system of world finance, complex and interrelated and opaque, where what happened yesterday can and does affect what happens tomorrow, and where one wrong tug of the thread can cause it all to unravel, is just such a system.

“I have been calling for the abandonment of certain risk measures since 1996 because they cause people to cross the street blindfolded,” he said toward the end of his lecture. “The system went bust because nobody listened to me.”

After the lecture, the professor who invited Taleb to Columbia took a handful of people out for a late lunch at a nearby diner. Somewhat surprisingly, given Taleb’s well-known scorn for risk managers, the professor had also invited several risk managers who worked at two big investment banks. We had barely been seated before they tried to engage Taleb in a debate over the value of VaR. But Taleb is impossible to argue with on this subject; every time they raised an objection to his argument, he dismissed it out of hand. “VaR can be useful,” said one of the risk managers. “It depends on how you use it. It can be useful in identifying trends.”

“This argument is addressed in ‘The Black Swan,’ ” Taleb retorted. “Not a single person has offered me an argument I haven’t heard.”

“I think VaR is great,” said another risk manager. “I think it is a fantastic tool. It’s like an altimeter in aircraft. It has some margin for error, but if you’re a pilot, you know how to deal with it. But very few pilots give up using it.”

Taleb replied: “Altimeters have errors that are Gaussian. You can compensate. In the real world, the magnitude of errors is much less known.”

Around and around they went, talking past each other for the next hour or so. It was engaging but unsatisfying; it didn’t help illuminate the role risk management played in the crisis.

The conversation had an energizing effect on Taleb, however. He walked out of the diner with a full head of steam, railing about the two “imbeciles” he had just had to endure. I used the moment to ask if he knew the people at RiskMetrics, a successful risk-management consulting firm that grew out of the original JPMorgan quant effort in the 1990s. “They’re intellectual charlatans,” he replied dismissively. “You can quote me on that.”

As we approached his car, he began talking about his own performance in 2008. Although he is no longer a full-time trader, he remains a principal in a hedge fund he helped found, Black Swan Protection Protocol. His fund makes trades that either gain or lose small amounts of money in normal times but can make oversize gains when a black swan appears. Taleb likes to say that, as a trader, he has made money only three times in his life — in the crash of 1987, during the dot-com bust more than a decade later and now. But all three times he has made a killing. With the world crashing around it, his fund was up 65 to 115 percent for the year. Taleb chuckled. “They wouldn’t listen to me,” he said finally. “So I decided, to hell with them, I’ll take their money instead.”

“VaR WAS INEVITABLE,” Gregg Berman of RiskMetrics said when I went to see him a few days later. He didn’t sound like an intellectual charlatan. His explanation of the utility of VaR — and its limitations — made a certain undeniable sense. He did, however, sound like somebody who was completely taken aback by the amount of blame placed on risk modeling since the financial crisis began.

“Obviously, we are big proponents of risk models,” he said. “But a computer does not do risk modeling. People do it( FINALLY, THE MAIN POINT ). And people got overzealous and they stopped being careful. They took on too much leverage( VERY TRUE. BUT THAT WAS THE POINT OF CDSs AND CDOs IN THE FIRST PLACE. IN OTHER WORDS, LEVERAGE WASN'T A BYPRODUCT, IT WAS THE GOAL. ). And whether they had models that missed that, or they weren’t paying enough attention, I don’t know. But I do think that this was much more a failure of management than of risk management. I think blaming models for this would be very unfortunate because you are placing blame on a mathematical equation. You can’t blame math( I AGREE COMPLETELY. HOWEVER, MY VERSION OF TALEB IS THAT HE IS SAYING THE SAME THING. ),” he added with some exasperation.

Although Berman, who is 42, was a founding partner of RiskMetrics, it turned out that he was one of the few at the firm who hadn’t come from JPMorgan. Still, he knew the back story. How could he not? It was part of the lore of the place. Indeed, it was part of the lore of VaR.

The late 1980s and the early 1990s were a time when many firms were trying to devise more sophisticated risk models because the world was changing around them. Banks, whose primary risk had long been credit risk — the risk that a loan might not be paid back( THAT IS IN FACT WHAT THE PROBLEM WITH SUBPRIME AND OTHER MORTGAGES IS. THESE BAD LOANS CANNOT BE BLAMED ON MATH MODELS. IT IS STRAIGHTFORWARD CREDIT RISK. ) — were starting to meld with investment banks, which traded stocks and bonds. Derivatives and securitizations — those pools of mortgages or credit-card loans that were bundled by investment firms and sold to investors — were becoming an increasingly important component of Wall Street. But they were devilishly complicated to value( THEN THEY ARE INHERENTLY RISKY. WHY DO YOU THINK PEOPLE WANT US TREASURIES NOW? ONE REASON IS THAT THEY ARE LIQUID, AND LIQUID MEANS LESS RISKY. ). For one thing, many of the more arcane instruments didn’t trade very often, so you had to try to value them by finding a comparable security that did trade( RISKY ). And they were sliced into different pieces — tranches they’re called — each of which had a different risk component( REALLY NO DIFFERENT THAN DIFFERENT GRADES OF BONDS. ). In addition, every desk had its own way of measuring risk, largely incompatible with that of every other desk.

JPMorgan’s chairman at the time VaR took off was a man named Dennis Weatherstone. Weatherstone, who died in 2008 at the age of 77, was a working-class Englishman who acquired the bearing of a patrician during his long career at the bank. He was soft-spoken, polite, self-effacing. By the time he took over JPMorgan, it had moved from being purely a commercial bank to being one of these new hybrids. Within the bank, Weatherstone had long been known as an expert on risk, especially when he was running the foreign-exchange trading desk. But as chairman, he quickly realized that he understood far less about the firm’s overall risk than he needed to. Did the risk in JPMorgan’s stock portfolio cancel out the risk being taken by its bond portfolio — or did it heighten those risks? How could you compare different kinds of derivative risks? What happened to the portfolio when volatility increased or interest rates rose? How did currency fluctuations affect the fixed-income instruments? Weatherstone had no idea what the answers were. He needed a way to compare the risks of those various assets and to understand what his companywide risk was.( I'M NOT SURE THAT I UNDERSTAND THIS. IF NOTHING IS RISKY, HOW DOES IT ADD UP TO RISKY? )

The answer the bank’s quants had come up with was Value at Risk. To phrase it that way is to make it sound as if a handful of math whizzes locked themselves in a room one day, cranked out some formulas, and — presto! — they had a risk-management system. In fact, it took around seven years, according to Till Guldimann, an elegant, Swiss-born, former JPMorgan banker who ran the team that devised VaR and who is now vice chairman of SunGard Data Systems. “VaR is not just one invention,” he said. “You solved one problem and another cropped up. At first it seemed unmanageable. But as we refined it, the methodologies got better.”

Early on, the group decided that it wanted to come up with a number it could use to gauge the most that any kind of portfolio could be expected to lose over the next 24 hours, with 95 percent confidence. (Many firms still use the 95 percent VaR, though others prefer 99 percent.) That became the core concept. When the portfolio changed, as traders bought and sold securities the next day, the VaR was then recalculated, allowing everyone to see whether the new trades had added to, or lessened, the firm’s risk( THIS MAKES SENSE ).
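The daily recalculation can be sketched with the parametric approach: compute the portfolio's one-day VaR, let a trade change the positions, and compute it again at both common confidence levels. The sketch assumes normally distributed returns; the two positions, their volatilities and the 0.9 correlation are hypothetical, and the example trade is a hedge of the kind the article describes Goldman putting on.

```python
import math
from statistics import NormalDist

def one_day_var(positions: list[float], vols: list[float],
                corr: list[list[float]], confidence: float) -> float:
    """One-day parametric VaR for dollar positions, given each position's daily
    volatility and the correlation matrix between them (normality assumed)."""
    n = len(positions)
    variance = sum(
        positions[i] * vols[i] * corr[i][j] * positions[j] * vols[j]
        for i in range(n) for j in range(n)
    )
    return NormalDist().inv_cdf(confidence) * math.sqrt(variance)

vols = [0.020, 0.018]            # hypothetical daily vols: bond position, hedge instrument
corr = [[1.0, 0.9], [0.9, 1.0]]  # hypothetical correlation
before = [100e6, 0.0]            # $100m long, no hedge
after = [100e6, -80e6]           # same position plus an $80m short hedge

for confidence in (0.95, 0.99):
    print(f"{confidence:.0%} VaR: before ${one_day_var(before, vols, corr, confidence) / 1e6:.2f}m, "
          f"after ${one_day_var(after, vols, corr, confidence) / 1e6:.2f}m")
```

Under the normal assumption the 99 percent figure is always about 1.41 times the 95 percent figure (2.33 versus 1.64 standard deviations), so the choice of convention alone changes the reported number substantially.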

“There was a lot of suspicion internally,” recalls Guldimann, because traders and executives — nonquants — didn’t believe that such a thing could be quantified mathematically. But they were wrong. Over time, as VaR was proved more correct than not day after day, quarter after quarter, the top executives came not only to believe in it but also to rely on it( VERY BAD ).

For instance, during his early years as a risk manager, pre-VaR, Guldimann often confronted the problem of what to do when a trader had reached his trading limit but believed he should be given more capital to play out his hand. “How would I know if he should get the increase?” Guldimann says. “All I could do is ask around. Is he a good guy? Does he know what he’s doing? It was ridiculous. Once we converted all the limits to VaR limits, we could compare. You could look at the profits the guy made and compare it to his VaR. If the guy who asked for a higher limit was making more money with lower VaR” — that is, with less risk — “it was a good basis to give him the money.”( THIS DOES MAKE SENSE )
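Guldimann's comparison amounts to a simple ratio of profit to VaR. A minimal sketch with invented traders and figures; the ratio's name and the choice to average VaR over the period are assumptions made for illustration, not JPMorgan's documented procedure.

```python
from dataclasses import dataclass

@dataclass
class Trader:
    name: str
    profit: float  # profit over the period, $ millions (hypothetical)
    var: float     # average daily VaR over the same period, $ millions (hypothetical)

def profit_per_var(trader: Trader) -> float:
    """Profit earned per dollar of VaR: a crude return-on-risk ratio."""
    return trader.profit / trader.var

traders = [Trader("Trader A", 12.0, 4.0), Trader("Trader B", 15.0, 10.0)]
for t in traders:
    print(f"{t.name}: ${t.profit}m profit on ${t.var}m VaR -> {profit_per_var(t):.1f}")

best = max(traders, key=profit_per_var)
print(f"stronger case for a higher limit: {best.name}")
```

Trader B made more money in absolute terms, but Trader A earned three times his VaR against B's one and a half, which is the kind of comparison Guldimann says VaR limits made possible.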

By the early 1990s, VaR had become such a fixture at JPMorgan that Weatherstone instituted what became known as the 4:15 report because it was handed out every day at 4:15, just after the market closed. It allowed him to see what every desk’s estimated profit and loss was, as compared to its risk, and how it all added up for the entire firm. True, it didn’t take into account Taleb’s fat tails, but nobody really expected it to do that( YES ). Weatherstone had been a trader himself; he understood both the limits and the value of VaR. It told him things he hadn’t known before. He could use it to help him make judgments( YES ) about whether the firm should take on additional risk or pull back. And that’s what he did.

What caused VaR to catapult above the risk systems being developed by JPMorgan competitors was what the firm did next: it gave VaR away. In 1993, Guldimann made risk the theme of the firm’s annual client conference. Many of the clients were so impressed with the JPMorgan approach that they asked if they could purchase the underlying system. JPMorgan decided it didn’t want to get into that business, but proceeded instead to form a small group, RiskMetrics, that would teach the concept to anyone who wanted to learn it, while also posting it on the Internet so that other risk experts could make suggestions to improve it. As Guldimann wrote years later, “Many wondered what the bank was trying to accomplish by giving away ‘proprietary’ methodologies and lots of data, but not selling any products or services.” He continued, “It popularized a methodology and made it a market standard, and it enhanced the image of JPMorgan.”

JPMorgan later spun RiskMetrics off into its own consulting company. By then, VaR had become so popular that it was considered the risk-model gold standard. Here was the odd thing, though: the month RiskMetrics went out on its own, September 1998, was also when Long-Term Capital Management “blew up.” L.T.C.M. was a fantastically successful hedge fund famous for its quantitative trading approach and its belief, supposedly borne out by its risk models, that it was taking minimal risk.( THIS IS A VERY IMPORTANT POINT )

L.T.C.M.’s collapse would seem to make a pretty good case for Taleb’s theories. What brought the firm down was a black swan it never saw coming: the twin financial crises in Asia and Russia. Indeed, so sure were the firm’s partners that the market would revert to “normal”( THIS IS SILLY. ) — which is what their model insisted would happen — that they continued to take on exposures that would destroy the firm as the crisis worsened, according to Roger Lowenstein’s account of the debacle, “When Genius Failed.” Oh, and another thing: among the risk models the firm relied on was VaR.

Aaron Brown, the former risk manager at Morgan Stanley, remembers thinking that the fall of L.T.C.M. could well lead to the demise of VaR. “It thoroughly punctured the myth that VaR was invincible( TRUE ),” he said. “Something that fails to live up to perfection is more despised than something that was never idealized in the first place.” After the 1987 market crash, for example, portfolio insurance, which had been sold by Wall Street as a risk-mitigation device, became largely discredited.

But that didn’t happen with VaR. There was so much schadenfreude associated with L.T.C.M. — it had Nobel Prize winners among its partners! — that it was easy for the rest of Wall Street to view its fall as an example of comeuppance. And for all that the fund promoted the ingenuity of its risk measures, it had taken far greater risks than it ever acknowledged.

For these reasons, other firms took to rationalizing away the fall of L.T.C.M.; they viewed it as a human failure( IT WAS ) rather than a failure of risk modeling. The collapse only amplified the feeling on Wall Street that firms needed to be able to understand their risks for the entire firm. Only VaR could do that. And finally, there was a belief among some, especially after the crisis abated, that the events that brought down L.T.C.M. were one in a million. We would never see anything like that again in our lifetime( SILLY ).

So instead of diminishing in importance, VaR became a more important part of the financial scene. The Securities and Exchange Commission, for instance, worried about the amount of risk that derivatives posed to the system, mandated that financial firms disclose that risk to investors( GOOD ), and VaR became the de facto measure( BAD ). If the VaR number increased from year to year in a company’s annual report, it meant the firm was taking more risk. Rather than doing anything to limit the growth of derivatives, the agency concluded( PEOPLE DID ) that disclosure, via VaR, was sufficient.

That, in turn, meant that even firms that had resisted VaR now succumbed. It meant that chief executives of big banks and investment firms had to have at least a passing familiarity with VaR. It meant that traders all had to understand the VaR consequences of making a big bet or of changing their portfolios. Some firms continued to use VaR as a tool while adding other tools as well, like “stress” or “scenario” tests, to see where the weak links in the portfolio were or what might happen if the market dropped drastically. But others viewed VaR as the primary measure they had to concern themselves with.

VaR, in other words, became institutionalized. RiskMetrics went from having a dozen risk-management clients to more than 600. Lots of competitors sprouted up. Long-Term Capital Management became an increasingly distant memory, overshadowed by the Internet boom and then the housing boom. Corporate chieftains like Stanley O’Neal at Merrill Lynch and Charles Prince at Citigroup pushed( YES ) their divisions to take more risk because they were being left behind in the race for trading profits. All over Wall Street, VaR numbers increased, but it still all seemed manageable — and besides, nothing bad was happening!

VaR also became a crutch. When an international banking group that advises national regulators decided the world needed more sophisticated ways to gauge the amount of capital that firms had to hold, Wall Street firms lobbied the group to allow them to use their internal VaR numbers. Ultimately, the group came up with an accord that allowed just that. It doesn’t seem too strong to say that as a direct result( ACTUALLY IT DOES, BECAUSE YOU'RE FORGETTING THAT LOWER CAPITAL STANDARDS WERE THE WHOLE POINT OF THE EXERCISE. THESE ARE MERELY RATIONALIZATIONS. THEY PROVIDED A VENEER OF SAFETY TO INHERENTLY RISKY INVESTMENTS. ), banks didn’t have nearly enough capital when the black swan began to emerge in the spring of 2007.

ONE THING THAT surprised me, as I made the rounds of risk experts, was that if you listened closely, their views weren’t really that far from Taleb’s diagnosis of VaR. They agreed with him that VaR didn’t measure the risk of a black swan. And they were critical in other ways as well. Yes, the old way of measuring capital requirements needed updating, but it was crazy to base it on a firm’s internal VaR, partly because that VaR was not set by regulators and partly because it obviously didn’t gauge the kind of extreme events that destroy capital and create a liquidity crisis — precisely the moment when you need cash on hand.( I AGREE )

Indeed, Ethan Berman, the chief executive of RiskMetrics (and no relation to Gregg Berman), told me that one of VaR’s flaws, which only became obvious in this crisis, is that it didn’t measure liquidity risk( A CALLING RUN ) — and of course a liquidity crisis is exactly what we’re in the middle of right now. One reason nobody seems to know how to deal with this kind of crisis is that nobody envisioned it.( WAIT. THAT WAS WHAT LTCM WAS. A CALLING RUN WAS EXACTLY WHAT THEY SHOULD HAVE FEARED SINCE THEY WERE, AND READ THIS CAREFULLY, LOWERING CAPITAL STANDARDS. IS THERE ANYTHING COMPLICATED IN WHAT I'M SAYING? )

In a crisis, Brown, the risk manager at AQR, said, “you want to know who can kill you and whether or not they will and who you can kill if necessary. You need to have an emergency backup plan that assumes everyone is out to get you. In peacetime, you think about other people’s intentions. In wartime, only their capabilities matter. VaR is a peacetime statistic( SILLY. IT WAS A TOOL THAT WAS MISUSED. ).”

VaR DIDN’T GET EVERYTHING right even in what it purported to measure. All the triple-A-rated mortgage-backed securities churned out by Wall Street firms and that turned out to be little more than junk? VaR didn’t see the risk because it generally relied on a two-year data history( THAT ALONE SHOULD LEAD YOU TO DISTRUST IT FOR ANY MAJOR RISK ASSESSMENT. COME ON. ). Although it took into account the increased risk brought on by leverage( OBVIOUSLY IT DIDN'T, BECAUSE IT DIDN'T EVEN CONTEMPLATE A CALLING RUN. ), it failed to distinguish between leverage that came from long-term, fixed-rate debt — bonds and such that come due at a set date — and loans that can be called in at any time and can, as Brown put it, “blow you up in two minutes.” That is, the kind of leverage that disappeared the minute something bad arose.( A CALLING RUN. )
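
The two-year-window problem is easy to see with historical simulation, one common way of computing VaR: the number can never reflect a kind of move that is absent from the lookback window. This is a minimal sketch, assuming a one-day historical-simulation VaR on a single position. The function name, the position size and both return windows are made up for illustration and are not any firm's actual model.

```python
def historical_var(returns, position_value, confidence=0.99):
    """One-day historical-simulation VaR, reported as a positive dollar loss.

    `returns` is the lookback window of daily fractional returns (roughly 500
    trading days for a two-year history). The result is the loss that was
    exceeded on only (1 - confidence) of the days in the window.
    """
    # Convert returns to dollar losses (gains become negative), worst first.
    losses = sorted((-r * position_value for r in returns), reverse=True)
    # Number of days allowed to be worse than the VaR, e.g. 5 of 500 at 99%.
    tail_days = int(round((1 - confidence) * len(losses)))
    if tail_days >= len(losses):
        raise ValueError("window too short for this confidence level")
    # A window whose tail still shows gains reports zero loss.
    return max(losses[tail_days], 0.0)


position = 1_000_000  # hypothetical $1 million position

# Two calm years: the price never moves more than half a percent a day.
calm_window = [0.005, -0.005] * 250
# The same-length window with ten -10% crash days in place of ten calm days.
stressed_window = [0.005, -0.005] * 245 + [-0.10] * 10

print(historical_var(calm_window, position))      # 5000.0
print(historical_var(stressed_window, position))  # 100000.0
```

The position is identical in both runs; only the history fed to the model differs, which is the "garbage in, garbage out" point made just below.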

“The old adage, ‘garbage in, garbage out’ certainly applies,” Groz said. “When you realize that VaR is using tame historical data to model a wildly different environment, the total losses of Bear Stearns’ hedge funds become easier to understand. It’s like the historic data only has rainstorms and then a tornado hits( ACTUALLY THEY USED WEATHER DATA TO MODEL SOME OF THE RISK. SILLY. ).”

Guldimann, the great VaR proselytizer, sounded almost mournful when he talked about what he saw as another of VaR’s shortcomings. To him, the big problem was that it turned out that VaR could be gamed( FRAUD ). That is what happened when banks began reporting their VaRs. To motivate managers, the banks began to compensate them not just for making big profits but also for making profits with low risks. That sounds good in principle, but managers began to manipulate( FRAUD ) the VaR by loading up on what Guldimann calls “asymmetric risk positions.” These are products or contracts that, in general, generate small gains and very rarely have losses. But when they do have losses, they are huge. These positions made a manager’s VaR look good because VaR ignored the slim likelihood of giant losses, which could only come about in the event of a true catastrophe. A good example was a credit-default swap, which is essentially insurance that a company won’t default( WAIT A MINUTE. THE WHOLE POINT OF A CDS IS TO CONTEMPLATE FORECLOSURE OR DEFAULT. ). The gains made from selling credit-default swaps are small and steady — and the chance of ever having to pay off that insurance was assumed to be minuscule( SILLY ). It was outside the 99 percent probability, so it didn’t show up in the VaR number. People didn’t see the size of those hidden positions lurking in that 1 percent that VaR didn’t measure.
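
An "asymmetric risk position" can look harmless at the 99 percent level because its losses live entirely in the last 1 percent. The sketch below uses a stylized payoff loosely modeled on selling credit protection. It compares the empirical VaR with expected shortfall, the average loss across the worst 1 percent of periods. Expected shortfall is a standard tail measure but is not discussed in the article, and the premium, payout and default count are hypothetical numbers chosen for the illustration.

```python
def var_and_es(losses, confidence=0.99):
    """Empirical VaR and expected shortfall (ES) from per-period losses.

    Losses are positive numbers and gains are negative. VaR is the loss
    exceeded in only (1 - confidence) of periods; ES is the average loss
    over that worst (1 - confidence) slice of the sample.
    """
    ranked = sorted(losses, reverse=True)                # worst outcome first
    tail = int(round((1 - confidence) * len(ranked)))    # e.g. 10 of 1,000
    if tail == 0 or tail >= len(ranked):
        raise ValueError("sample size does not fit this confidence level")
    var = ranked[tail]
    es = sum(ranked[:tail]) / tail
    return var, es


# Hypothetical protection seller: collects a $1 million premium each period,
# but in 5 of 1,000 periods the reference credit defaults and it pays out
# $100 million, a net loss of $99 million.
losses = [-1_000_000] * 995 + [99_000_000] * 5

var, es = var_and_es(losses)
print(f"99% VaR: {var:,}")                   # negative: the VaR shows a gain
print(f"99% expected shortfall: {es:,.0f}")
```

The VaR reports a $1 million gain, so a manager paid for "profits with low risk" looks excellent, while the average outcome in the worst 1 percent is a $49 million loss.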

EVEN MORE CRITICAL, it did not properly account for leverage( AGAIN. THAT'S THE WHOLE POINT OF A CDS AS OPPOSED TO JUST REGULAR INSURANCE. ) that was employed through the use of options. For example, said Groz, if an asset manager borrows money to buy shares of a company, the VaR would usually increase. But say he instead enters into a contract that gives someone the right to sell him those shares at a lower price at a later time — a put option. In that case, the VaR might remain unchanged. From the outside, he would look as if he were taking no risk, but in fact, he is( THAT'S NEGLIGENCE. ). If the share price of the company falls steeply, he will have lost a great deal of money. Groz called this practice “stuffing risk into the tails.”
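
Groz's example can be sketched with scenario P&L. The sketch compares a leveraged stock purchase with writing a put, under price moves resembling a calm history and under one crash. To keep it short, it values the put at its expiry payoff, which ignores time value; a real mark-to-market model would show some small daily sensitivity. The strike, premium and price scenarios are hypothetical.

```python
def leveraged_long_pnl(shares, entry_price, new_price):
    """P&L on shares bought with borrowed money (interest cost ignored)."""
    return shares * (new_price - entry_price)


def short_put_pnl(shares, strike, premium, new_price):
    """P&L at expiry for someone who wrote (sold) puts on `shares` shares.

    The writer keeps the premium but must buy the shares at `strike`, so the
    loss is the strike minus the market price whenever the price is below it.
    """
    return shares * (premium - max(strike - new_price, 0.0))


shares, entry = 100, 100.0
strike, premium = 90.0, 0.5  # hypothetical out-of-the-money put

calm_prices = [95.0, 98.0, 100.0, 102.0, 105.0]  # moves like the recent history
crash_price = 50.0

worst_calm_long = min(leveraged_long_pnl(shares, entry, p) for p in calm_prices)
worst_calm_put = min(short_put_pnl(shares, strike, premium, p) for p in calm_prices)
print(worst_calm_long, worst_calm_put)  # -500.0 50.0
print(leveraged_long_pnl(shares, entry, crash_price),
      short_put_pnl(shares, strike, premium, crash_price))  # -5000.0 -3950.0
```

In the calm scenarios the put writer never shows a loss, so a risk number built from those moves sees nothing. In the crash the put writer loses almost as much as the leveraged buyer. That is the sense in which risk has been "stuffed into the tails."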

And yet, instead of dismissing VaR as worthless, most of the experts I talked to defended it. The issue, it seemed to me, was less what VaR did and did not do than how you thought about it. Taleb says that because VaR didn’t measure the 1 percent, it was worse than useless — it was downright harmful( IN THE WRONG HANDS. HE'S CALLING THESE PEOPLE IDIOTS. ). But most of the risk experts said there was a great deal to be said for being able to manage risk 99 percent of the time, however imperfectly, even though it meant you couldn’t account for the last 1 percent.

“If you say that all risk is unknowable,” Gregg Berman said, “you don’t have the basis of any sort of a bet or a trade. You cannot buy and sell anything unless you have some idea of the expectation of how it will move( TRUE ).” In other words, if you spend all your time thinking about black swans, you’ll be so risk averse you’ll never do a trade. Brown put it this way: “NT” — that is how he refers to Nassim Nicholas Taleb — “says that 1 percent will dominate your outcomes. I think the other 99 percent does matter. There are things you can do to control your risk( TRUE ). To not use VaR is to say that I won’t care about the 99 percent, in which case you won’t have a business. That is true even though you know the fate of the firm is going to be determined by some huge event. When you think about disasters, all you can rely on is the disasters of the past. And yet you know that it will be different in the future. How do you plan for that?”( BAGEHOT'S PRINCIPLES, SIR )

One risk-model critic, Richard Bookstaber, a hedge-fund risk manager and author of “A Demon of Our Own Design,” ranted about VaR for a half-hour over dinner one night. Then he finally said, “If you put a gun to my head and asked me what my firm’s risk was, I would use VaR.” VaR may have been a flawed number, but it was the best number anyone had come up with.

Of course, the experts I was speaking to were, well, experts. They had a deep understanding of risk modeling and all its inherent limitations. They thought about it all the time. Brown even thought VaR was good when the numbers seemed “off,” or when it started to “miss” on a regular basis — it either meant that there was something wrong with the way VaR was being calculated, or it meant the market was no longer acting “normally.” Either way, he said, it told you something useful( THAT'S ALL THAT MODELS OR THEORIES CAN EVER DO ).
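
Brown's point about VaR "missing" is what backtesting formalizes: count the days on which the actual loss exceeded that day's VaR and compare the count with what a 99 percent model should produce, about 2.5 days in a 250-day year. This is a minimal sketch. The green/yellow/red zones are assumed from the Basel backtesting framework, and the P&L and VaR series are hypothetical.

```python
def count_exceptions(daily_pnl, daily_var):
    """Number of days on which the realized loss was larger than that day's VaR."""
    return sum(1 for pnl, var in zip(daily_pnl, daily_var) if -pnl > var)


def traffic_light(exceptions):
    """Zone for a 250-day backtest of a 99% VaR (thresholds assumed from Basel)."""
    if exceptions <= 4:
        return "green"
    if exceptions <= 9:
        return "yellow"
    return "red"


days = 250
expected = round(0.01 * days, 1)  # about 2.5 misses a year if the model is right

# Hypothetical year: VaR reported at $10 million every day, ordinary $1 million
# losses, and 12 days on which the loss was $15 million.
var_series = [10_000_000] * days
pnl_series = [-1_000_000] * (days - 12) + [-15_000_000] * 12

misses = count_exceptions(pnl_series, var_series)
print(misses, expected, traffic_light(misses))  # 12 2.5 red
```

A red result does not say what went wrong. As Brown put it, either the VaR is being calculated badly or the market has stopped behaving "normally," and either way it is a signal worth acting on.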

“When I teach it,” Christopher Donohue, the managing director of the research group at the Global Association of Risk Professionals, said, “I immediately go into the shortcomings. You can’t calculate a VaR number and think you know everything you need( TRUE ). On a day-to-day basis I don’t care so much that the VaR is 42. I care about where it was yesterday and where it is going tomorrow. What direction is the risk going?” Then he added, “That is probably another danger: because we put a dollar number to it, they attach a meaning to it( GOOD POINT ).”

By “they,” Donohue meant everyone who wasn’t a risk manager or a risk expert. There were the investors who saw the VaR numbers in the annual reports but didn’t pay them the least bit of attention. There were the regulators who slept soundly in the knowledge that, thanks to VaR, they had the whole risk thing under control. There were the boards who heard a VaR number once or twice a year and thought it sounded good. There were chief executives like O’Neal and Prince. There was everyone, really, who, over time, forgot that the VaR number was only meant to describe what happened 99 percent of the time. That $50 million wasn’t just the most you could lose 99 percent of the time. It was the least you could lose 1 percent of the time. In the bubble, with easy profits being made and risk having been transformed into mathematical conceit, the real meaning of risk had been forgotten. Instead of scrutinizing VaR for signs of impending trouble, they took comfort in a number and doubled down, putting more money at risk in the expectation of bigger gains. “It has to do with the human condition,” said one former risk manager. “People like to have one number they can believe in( THIS IS ANOTHER WAY OF SAYING THAT PEOPLE WERE PREDISPOSED TO EXTEND RISK, AND VAR GAVE THEM THE EXCUSE. HOWEVER, NOTHING FORCES YOU TO EXTEND RISK OR THROW OUT INVESTING AND BANKING COMMON SENSE. ).”
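
The $50 million point can be written as a statement about a quantile. Let L be a day's loss and assume, for simplicity, a continuous loss distribution. A 99 percent VaR of $50 million then says the following, where the second line uses expected shortfall (ES), a standard name for the average loss beyond the VaR that the article itself does not use:

```latex
\begin{aligned}
\Pr(L \le \$50\,\text{million}) = 0.99
  &\iff \Pr(L > \$50\,\text{million}) = 0.01, \\
\mathrm{ES}_{0.99} = \mathbb{E}\left[\,L \mid L > \$50\,\text{million}\,\right]
  &\ge \$50\,\text{million}.
\end{aligned}
```

The first line is "the most you could lose 99 percent of the time." Read the other way, it is "the least you could lose 1 percent of the time." Nothing in the VaR figure itself bounds how far above $50 million those bad days can go.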

Brown told me: “You absolutely could see it coming( I AGREE ). You could see the risks rising( I AGREE ). However, in the two years before the crisis hit, instead of preparing for it, the opposite took place to an extreme degree. The real trouble we got into today is because of things that took place in the two years before, when the risk measures were saying that things were getting bad( I AGREE ).”

At most firms, risk managers are not viewed as “profit centers,” so they lack the clout of the moneymakers on the trading desks. That was especially true at the tail end of the bubble, when firms were grabbing for every last penny of profit.

At the height of the bubble, there was so much money to be made that any firm that pulled back because it was nervous about risk would forsake huge short-term gains and lose out to less cautious rivals. The fact that VaR didn’t measure the possibility of an extreme event was a blessing to the executives. It made black swans all the easier to ignore( NEGLIGENCE ). All the incentives — profits, compensation, glory, even job security — went in the direction of taking on more and more risk, even if you half suspected it would end badly( THIS IS WHERE GOVERNMENT GUARANTEES COME IN ). After all, it would end badly for everyone else too( THIS IS WHERE GOVERNMENT GUARANTEES COME IN ). As the former Citigroup chief executive Charles Prince famously put it, “As long as the music is playing, you’ve got to get up and dance.” Or, as John Maynard Keynes once wrote, a “sound banker” is one who, “when he is ruined, is ruined in a conventional and orthodox way.”

MAYBE IT WOULD HAVE been different if the people in charge had a better understanding of risk. Maybe it would have helped if Wall Street hadn’t turned VaR into something it was never meant to be. “If we stick with the Dennis Weatherstone example,” Ethan Berman says, “he recognized that he didn’t have the transparency into risk that he needed to make a judgment( THAT'S THE TICKET ). VaR gave him that, and he and his managers could make judgments. To me, that is how it should work. The role of VaR is as one input into that process. It is healthy for the head of the firm to have that kind of information. But people need to have incentives to give him that information( WHAT'S THEIR JOB? ).”

Which brings me back to David Viniar and Goldman Sachs. “VaR is a useful tool,” he said as our interview was nearing its end. “The more liquid the asset( YES ), the better the tool. The more history( YES ), the better the tool. The less of both, the worse it is. It helps you understand what you should expect to happen on a daily basis in an environment that is roughly the same. We had a trade last week in the mortgage universe where the VaR was $1 million. The same trade a week later had a VaR of $6 million. If you tell me my risk hasn’t changed — I say yes it has!” Two years ago, VaR worked for Goldman Sachs the way it once worked for Dennis Weatherstone — it gave the firm a signal that allowed it to make a judgment about risk. It wasn’t the only signal, but it helped. It wasn’t just the math that helped Goldman sidestep the early decline of mortgage-backed instruments. But it wasn’t just judgment either. It was both. The problem on Wall Street at the end of the housing bubble is that all judgment was cast aside. The math alone was never going to be enough.( TRUE )
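
Viniar's $1 million to $6 million example shows VaR working as a signal: the position did not change, but the measured risk did. One simple way to see how that happens is the variance-covariance (parametric) method, which assumes normally distributed returns. Under that method, VaR scales linearly with daily volatility, so a sixfold jump in VaR corresponds to a sixfold jump in volatility. The article does not say which method Goldman used; the function name, position size and volatilities below are hypothetical.

```python
from statistics import NormalDist


def parametric_var(position_value, daily_vol, confidence=0.99):
    """One-day variance-covariance VaR, assuming normally distributed returns."""
    z = NormalDist().inv_cdf(confidence)  # about 2.326 for 99%
    return z * daily_vol * position_value


position = 100_000_000  # hypothetical $100 million mortgage position
calm = parametric_var(position, daily_vol=0.0043)       # about $1.0 million
stressed = parametric_var(position, daily_vol=0.0258)   # about $6.0 million
print(f"{calm:,.0f}  {stressed:,.0f}  ratio {stressed / calm:.1f}")
```

The same normal assumption that makes this calculation simple is what makes it weak in the tails, which is why the next paragraph's separate fat-tail models matter.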

Like most firms, Goldman does have other models to test for the fat tails. But even Goldman has been caught flat-footed by the crisis, struggling with liquidity, turning itself into a bank holding company and even, at one dire moment, struggling to combat rumors that it would be the next to fall.

“The question is: how extreme is extreme?” Viniar said. “Things that we would have thought were so extreme have happened. We used to say, What will happen if every equity market in the world goes down by 30 percent at the same time? We used to think of that as an extreme event — except that now it has happened. Nothing ever happens until it happens for the first time.”( LTCM. THE S & L CRISIS. )

Which didn’t mean you couldn’t use risk models to sniff out risks. You just had to know that there were risks they didn’t sniff out — and be ever vigilant for the dragons. When Wall Street stopped looking for dragons, nothing was going to save it. Not even VaR.

It's a good post, with some good points, but it still didn't mention fraud or government guarantees, for instance. This pathetic view of math models is so silly that going over it again and again seems like overkill, but maybe it isn't. In the end, the math models didn't cause the problem. People did. Even the most basic explanation, that the models fooled people, I consider negligence, since the inherent risk of leveraging is apparent to everyone, including people being paid millions for managing investments.
